Accelerating Saccadic Response through Spatial and Temporal Cross-Modal Misalignments

SIGGRAPH 2024

Daniel Jiménez Navarro¹, Xi Peng², Yunxiang Zhang², Karol Myszkowski¹, Hans-Peter Seidel¹, Qi Sun², Ana Serrano³

¹Max-Planck-Institut für Informatik, ²New York University, ³University of Zaragoza

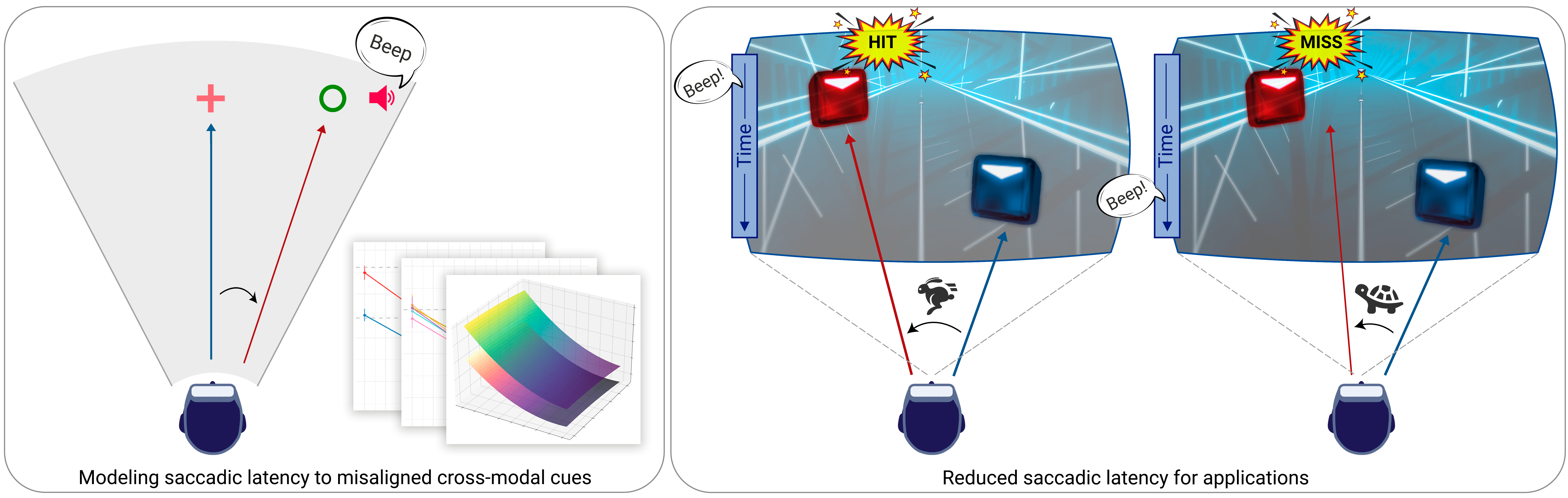

Teaser

Human senses and perception are the mechanisms through which we explore the external world. In this context, visual saccades (rapid, coordinated eye movements) are a primary tool for becoming aware of our surroundings. In everyday settings, however, our perception is not limited to vision: it is enriched by cross-modal interactions, such as the combination of sight and hearing.

In this work, we investigate the temporal and spatial relationships of these interactions, focusing on how auditory cues that precede visual stimuli influence saccadic latency, i.e., the time it takes for the eyes to react and start moving toward a visual target. Our research, conducted in a virtual reality environment, reveals that auditory cues preceding visual information can significantly accelerate saccadic responses, but that this effect plateaus beyond certain temporal thresholds. Additionally, while the spatial position of the visual stimulus influences the speed of these saccadic responses, as reported in previous research, we find that the location of the auditory cue relative to its corresponding visual stimulus has no comparable effect.
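To make the definition of saccadic latency concrete, here is a minimal sketch that estimates it from timestamped gaze samples. It is not the authors' measurement pipeline: the velocity-threshold rule for detecting saccade onset, the 30 deg/s default threshold, the one-dimensional (horizontal) gaze signal, and the function name `saccadic_latency_ms` are all illustrative assumptions.

```python
from typing import Optional, Sequence


def saccadic_latency_ms(
    times_ms: Sequence[float],
    gaze_deg: Sequence[float],
    visual_onset_ms: float,
    velocity_threshold_deg_s: float = 30.0,  # assumed threshold, not from the paper
) -> Optional[float]:
    """Hypothetical helper: delay from visual onset to saccade onset.

    Saccade onset is taken as the first sample after visual onset whose
    angular velocity (relative to the previous sample) exceeds the
    threshold. Returns None if no saccade is detected.
    """
    for i in range(1, len(times_ms)):
        # Ignore samples recorded before the visual target appeared.
        if times_ms[i] <= visual_onset_ms:
            continue
        dt_s = (times_ms[i] - times_ms[i - 1]) / 1000.0
        if dt_s <= 0:
            continue  # skip duplicate or out-of-order timestamps
        velocity_deg_s = abs(gaze_deg[i] - gaze_deg[i - 1]) / dt_s
        if velocity_deg_s > velocity_threshold_deg_s:
            return times_ms[i] - visual_onset_ms
    return None


# Usage: 100 Hz samples; gaze is still until 200 ms, then moves at 200 deg/s.
times = [float(t) for t in range(0, 301, 10)]
gaze = [0.0 if t <= 200 else 0.2 * (t - 200) for t in times]
print(saccadic_latency_ms(times, gaze, visual_onset_ms=50.0))  # 160.0
```

In a cross-modal trial, the auditory cue would lead the visual onset by some interval; the latency is still measured from the visual onset, since that is when the saccade target becomes available.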

To validate our findings, we implement two practical applications: first, a basketball training task set in a more realistic environment with complex audiovisual signals; and second, an interactive farm game that explores previously untested values of our key factors. Lastly, we discuss further applications that could benefit from our model.